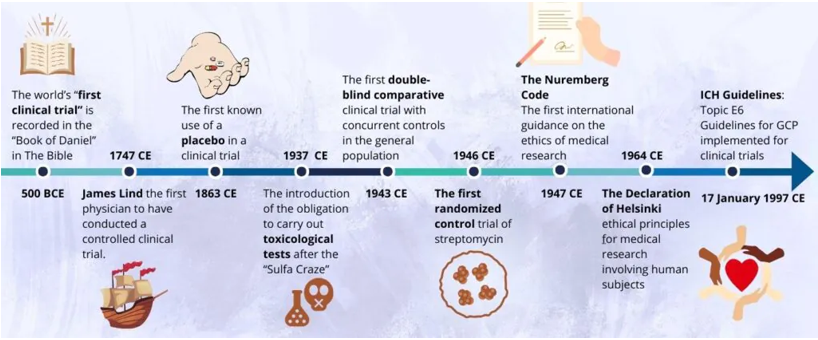

Healthcare research has come a long way in the past few hundred years, from simple observations to modern large-scale intervention studies involving thousands of participants. Each day, healthcare professionals and scientists work to develop new and improved methods for screening, preventing, diagnosing, and treating illnesses, as well as for organizing and delivering healthcare services.

The evolution of clinical research has been a long and fascinating journey. Clinical trials have been a part of the medical landscape for 275 years, creating opportunities for physicians to find viable treatments for a range of conditions.

The progression of scientific methods—such as intervention comparisons, randomization, blinding, and placebo controls—has driven ongoing advancements in clinical care. These techniques have not only enhanced the quality of research but also ensured ethical considerations. Along the way, there have been challenges but also triumphs. The clinical trials of decades – or even centuries – past brought the industry to where it is today.

History of clinical trials:

World’s First Clinical Trial

The “Book of Daniel” in the Bible, dated around 500 BC, describes an early example of a nutritional experiment conducted by King Nebuchadnezzar. The king ordered a diet of meat and wine for his subjects, believing it would ensure optimal physical health. However, several young men chose a vegetarian diet of legumes and water instead. After ten days, the vegetarians appeared healthier, leading the king to permit their continued diet. This experiment is considered one of the earliest instances of using an open, uncontrolled study to guide public health decisions.

In 1025 AD, the Persian physician Ibn Sīnā, known in the West as Avicenna, made significant contributions to clinical research with his encyclopaedic work, the “Canon of Medicine.” He proposed that remedies should be tested in their natural state on individuals with uncomplicated diseases. His guidelines emphasized a systematic approach to drug testing, reflecting principles that align with contemporary clinical trials.

Both historical examples highlight the evolution of clinical experimentation, showcasing early efforts to understand and improve health through systematic investigation. These foundational practices laid the groundwork for modern clinical research methodologies.

A Happy Accident: Trial of a Novel Therapy:

The first clinical trial of a novel therapy was conducted accidentally by the famous surgeon Ambroise Paré in 1537. After running out of boiling elderberry oil for treating battlefield wounds, Paré used an alternative ointment made of turpentine, egg yolk, and rose oil.

Despite his initial concerns, the ointment proved more effective than the standard treatment, causing less pain and irritation while improving outcomes. This accidental discovery led Paré to abandon cauterization and marked a significant advancement in medical care through empirical experimentation. His observation set a precedent for future medical practices and innovations.

The modern era: from James Lind’s scurvy trial to randomization

James Lind, a Scottish surgeon often called the “Father of Clinical Trials,” conducted the first modern controlled clinical trial in 1747 to address scurvy among sailors. By assigning different dietary regimens to groups of patients, he found that oranges and lemons, which we now know are rich in vitamin C, were the most effective against scurvy. Despite his findings, the use of citrus fruits was not adopted until 50 years later, with lime juice eventually replacing lemons due to cost.

In 2003, the James Lind Library was established to honor his work and promote understanding of fair tests of treatments. May 20, the date on which Lind began his trial, is now observed as International Clinical Trials Day, which the Association of Clinical Research Professionals marks to celebrate advancements in clinical research.

The first use of a placebo in a clinical trial – 1800s

The arrival of the placebo marked another important breakthrough in the history of clinical research. The concept of the placebo began to take shape in the early 1800s, with the term first appearing in medical literature. It wasn’t until 1863 that U.S. physician Austin Flint conducted the first clinical study comparing a placebo to an active treatment for rheumatism. Flint’s study, described in his 1886 book, demonstrated how a dummy remedy could gain patient confidence, marking a key development in clinical trial methodology.

The next stage followed the Sulfa Craze of 1937, in which improperly prepared sulfa drugs caused 107 deaths. In response, the Federal Food, Drug, and Cosmetic Act of 1938 was enacted. This legislation mandated toxicological testing for new drugs to ensure their safety before they could be marketed.

First Double-Blind Clinical Trial – 1943

In 1943, the UK Medical Research Council conducted the first double-blind comparative clinical trial to test patulin, an extract of Penicillium patulinum, for the common cold. The study was meticulously controlled, with both physicians and patients blinded to the treatment. Although patulin was not found effective, the trial established a model for future double-blind studies.

First Randomized Curative Trial – 1946

In 1946, nearly 200 years after James Lind’s first clinical trial, the UK Medical Research Council conducted the first randomized controlled trial of streptomycin for pulmonary tuberculosis. Faced with a shortage of the drug, they randomly assigned participants to treatment and control groups, eliminating biases in patient assignment. This landmark study established randomization as a standard practice in clinical trials and marked the beginning of a “new era of medicine.”

Evolution of Ethical and Regulatory Framework

In the early 20th century, scientific advancements in clinical trials accelerated rapidly, but the 1930s and 40s saw unethical experiments, including deliberately infecting a baby with herpes and testing drugs on prisoners, as well as atrocities in World War II concentration camps. These abuses highlighted the urgent need for a stronger focus on ethical standards in clinical research.

- Nuremberg Code – 1947:

The Nuremberg Code, established in 1947, was the first international set of ethical guidelines for medical research involving human subjects. It sets out research ethics principles for human experimentation.

Thalidomide tragedy: In 1961, thalidomide was withdrawn from the market after children whose mothers had taken it during pregnancy as a treatment for morning sickness were born with deformed limbs and defective organs.

- Declaration of Helsinki – 1964:

The World Medical Association developed the Declaration of Helsinki as a statement of ethical principles to provide guidance to physicians and other participants in medical research involving human subjects. The basic principles include respect for individuals, the right to make informed decisions, and recognition of vulnerable groups. It has been revised several times, including in 1975, 1983, 1989, 1996, 2000, and 2008.

In 1966, the International Covenant on Civil and Political Rights declared that no one should face torture or cruel, inhuman treatment, and emphasized the need for consent in medical and scientific treatment. Dr. Henry Beecher’s 1966 study on research abuses, along with the revelation of the Tuskegee study’s unethical practices in the 1970s, heightened the push for stricter regulation of government-funded research.

- Belmont Report – 1978:

The report was issued by the National Commission for the Protection of Human Subjects of Biomedical and Behavioral Research to outline ethical principles and guidelines for safeguarding human subjects in research. The core principles include respect for persons, beneficence, and justice.

The ICH-GCP Guidelines: The Pillar of Clinical Trials – 1996

To address international inconsistencies and improve global standards, the International Conference on Harmonisation of Technical Requirements for Registration of Pharmaceuticals for Human Use (ICH) issued the ICH Guidelines: Topic E6 for Good Clinical Practice (GCP), which were approved on July 17, 1996, and implemented from January 17, 1997.

The ICH-GCP guidelines are considered the definitive framework for clinical trials and are continually evolving. Following the release of the ICH E6(R2) guidelines in 2016, which addressed advances in technology and trial complexity, the ICH introduced a draft of the ICH E6(R3) guidelines in May 2023. These guidelines establish standards for protecting the rights, safety, and welfare of clinical trial participants while ensuring the credibility and quality of data collected.

Evolution of Clinical Trials in India

India has recently been recognized as an attractive country for clinical trials. In 1945, a Clinical Research Unit, the first research unit of the Indian Research Fund Association (IRFA) attached to a medical institution, was established at the Indian Cancer Research Centre, Bombay.

In 1949, IRFA was redesignated as the Indian Council of Medical Research (ICMR). The Central Ethical Committee of ICMR on Human Research, constituted under the Chairmanship of Hon’ble Justice (Retired) M.N. Venkatachalam, held its first meeting on September 10, 1996. The committee released Ethical Guidelines for Biomedical Research on Human Participants in 2000, which were revised in 2006. Schedule Y of the Drugs and Cosmetics Act came into force in 1988 and established the regulatory guidelines for clinical trial (CT) permission.

The Drugs Controller General of India (DCGI) is the government official who grants permission for new drugs to be administered to human subjects in clinical trials conducted in the country.

Meanwhile, in 2001, the Central Drugs Standard Control Organisation (CDSCO) released guidelines on Indian Good Clinical Practice. The central government, via the CDSCO under the Ministry of Health and Family Welfare, regulates drug manufacturing, sales, clinical trials, and import and export in India.